Facebook in India: Why is it still allowing hate speech against Muslims?

The head priest was clear about what had to be done. “I want to eliminate Muslims and Islam from the face of the Earth,” he declared. His followers listened, enraptured. Delivered in October 2019, the speech by Yati Narsinghanand, head of the Dasna Devi temple in the north Indian state of Uttar Pradesh, was filmed and posted on Facebook. By the time Facebook removed it, the tirade had been viewed more than 32 million times.

The priest spelled out his vision more clearly in a speech posted on Facebook in the same month, which has received more than 59 million views. “As long as I am alive,” he promised, “I will use weapons. I am telling each and every Muslim, Islam will be eradicated from the country one day…”

Three years after it was delivered, the speech can still be viewed on Facebook. Meta, as Facebook’s parent company has been known since October 2021, failed to explain why when asked by Middle East Eye.

This, experts say, is the language of genocide.

In March 2021, controversy erupted in India after a 14-year-old Muslim boy entered Narsinghanand’s temple to drink water and was brutally attacked by his followers.

In the wake of the public furore, The London Story, a Netherlands-based organisation which counters disinformation and hate speech, reported that interactions on the preacher’s fan pages rose sharply.

Narsinghanand has done little to present himself as peaceful and tolerant: violent and chauvinistic imagery are central to his brand, as they are to many who share his views. Facebook photos in December 2019 showed him and his followers brandishing machetes and swords. His targets are never in doubt: India’s 200 million-plus Muslims.

Hate speech towards Muslims

Hate speech directed at religious minorities has become a routine feature of public life in India. From 2009 to 2014 there were 19 instances of hostile rhetoric towards minorities by high-ranking politicians. But from 2014, when Prime Minister Narendra Modi’s Bharatiya Janata Party (BJP) entered government, to the start of 2022 there were 348 such instances – a surge of 1,130 percent.

Today, some genocide experts speak of a possible genocide against India’s Muslims.

In this article, we will examine how Meta, one of the biggest media organisations in the world, has become a broadcaster of genocidal hate speech through Facebook, with potentially nightmarish consequences.

Facebook has nearly 350 million users in India. It is the platform’s largest market by users, as it is for Instagram and WhatsApp, which are also owned by Meta. Ahead of the 2018 Karnataka Assembly elections, for example, the BJP created between 23,000 and 25,000 WhatsApp groups to disseminate political propaganda.

The London Story says that Facebook has “provided a megaphone for political and religious mobilisation on a scale not seen before”.

Fuzail Ahmad Ayyubi, a lawyer practising at the Indian Supreme Court who has worked on high-profile cases relating to hate, told Middle East Eye how social media platforms toxify politics.

“The cost of internet is very cheap, almost free, and smartphones are cheap too. Previously, the damage that hate speech could do was limited. Through social media, it can go to the entire country. Facebook isn’t just a vehicle for hate speech, it’s being used as a loudspeaker.”

Meta denies such criticism. It told Middle East Eye: “We have zero tolerance for hate speech on Facebook and Instagram, and we remove it when we’re made aware of it.”

But it’s instructive to examine the role of Facebook in India’s February 2020 violence, when the Muslim-majority neighbourhoods of north-east Delhi were stormed by a mob who destroyed mosques, shops, homes and cars. Fifty-three people died, 40 of whom were Muslim. Many more lost their homes and businesses.

In the months leading up to the carnage, Narsinghanand delivered a steadily intensifying stream of anti-Muslim rhetoric, some of which was shown on Facebook. Muslims protesting against discriminatory citizenship laws that threaten to disenfranchise millions of people “should find out what will happen to them the day we come out”, he declared less than two months before the massacre.

Narsinghanand’s prominence on social media has made him a celebrity. And he is more than just a hate preacher - he has explicitly incited violence against Muslims.

In December 2021, at a gathering in the northern city of Haridwar, Narsinghanand told a crowd to “update your weapons… More and more offspring and better weapons, only they can protect you.” At the same event, a fellow speaker declared: “Even if just a hundred of us become soldiers and kill two million of them, we will be victorious.” Video footage of this speech was viewed by thousands on Facebook before being removed.

Narsinghanand was arrested for hate speech after the event, before being released on bail. But Facebook continued to allow footage of his speeches to be viewed on its platform.

After the violence in Delhi in 2020, Facebook came under scrutiny. The Delhi Assembly Committee on Peace and Harmony, appointed by Delhi’s Legislative Assembly to resolve issues between religious and social groups, looked into Facebook’s involvement in the violence. But Facebook refused to appear before the committee: Ajit Mohan, the head of Facebook India, said that the assembly should not interfere in a law and order issue.

The response from Raghav Chadha, the committee’s chairman, was withering. He accused Mohan of “an attempt to conceal crucial facts in relation to Facebook’s role in the February 2020 Delhi communal violence” and accused Facebook itself of “running away” from scrutiny. In September 2020, the committee “prima facie found Facebook complicit” in the riots, stating that the company “sheltered offensive and hateful content on its platform”.

Facebook has a shameful record of being used against minorities in sensitive political environments. In 2017, nearly a million Rohingya Muslims began to flee Myanmar's Rakhine state after being subjected to a campaign of mass violence and rape. In late 2021, Rohingya refugees sued Facebook for $150bn, accusing it of failing to take action against hate speech. The company itself said that it was "too slow to prevent misinformation and hate" in Myanmar.

A Meta spokesperson told Middle East Eye that it had made significant efforts to improve the situation in Myanmar: "Meta stands with the international community and supports the efforts to bring accountability for the perpetrators of these crimes against the Rohingya. Over the years, we’ve built a dedicated team of Burmese speakers, banned the Tatmadaw [Myanmar’s armed forces], disrupted networks trying to manipulate public debate, and invested in Burmese-language technology to help reduce the prevalence of harmful content.”

Gregory Stanton, the president of Genocide Watch, predicted the 1994 Rwanda genocide five years before it happened. Earlier this year, Stanton said that "the early warning signs of genocide are present in India". He also highlighted similarities between the situation in India and the climate in Myanmar before the killings there.

Many Indian Muslims fear for their future. In April, journalist Asim Ali wrote of his alarm that the government is unwittingly "ceding its monopoly of violence" to "right-wing vigilantes", who are increasingly becoming "autonomous centres of power". Indian history shows that demagogues are capable of whipping up mobs and triggering violence while local authorities and the police look on helplessly.

Supreme Court ban, still on Facebook

The RSS (Rashtriya Swayamsevak Sangh) is a paramilitary organisation that includes Prime Minister Narendra Modi among its nearly 600,000 members. Founded in 1925 and modelled on European fascist movements, it is the main proponent of what is widely described as Hindutva, or Hindu nationalist, politics. Its adherents view Muslims and Christians in India as foreign and reject the country’s religious diversity championed by the founders of the modern Indian state.

Vinayak Damodar Savarkar was one of its earliest ideologues. In 1944, three years before Indian independence, an American journalist asked him: “How do you plan to treat the Mohammedans [Muslims]?”

Savarkar replied: “As a minority, in the position of your Negroes.”

Another prominent RSS thinker, Madhav Sadashivrao Golwalkar, approved of Hitler, writing in 1939 that Germany’s “purging the country of the semitic Race - the Jews” was “a good lesson for us in Hindustan [India] to learn and profit by”. Both Savarkar and Golwalkar are widely revered figures among RSS followers. So, increasingly, is Nathuram Godse, who assassinated Mahatma Gandhi in January 1948, and who at one time was an RSS member and student of Savarkar.

What is Hindutva?

The term Hindutva was first coined in 1892 by Bengali scholar Chandranath Basu, to describe the state or quality of being Hindu, or "Hindu-ness".

It took on its contemporary meaning, as that of a political ideal, in a 1923 essay by Indian activist Vinayak Damodar Savarkar. In a paper titled “Hindutva: Who is a Hindu?” Savarkar, who described himself as an atheist, wrote that “Hindutva is not identical with what is vaguely indicated by the term Hinduism.” He sought to separate the term from “religious dogma or creed,” and instead link the idea of Hinduism with that of ethnic, cultural and political identity.

Savarkar posited that to Hindus, India was both a “fatherland” and a “holy land”, distinguishing them from non-Hindus, for whom it was the former but not the latter. “Their holy land is far off in Arabia or Palestine. Their mythology and god-men, ideas and heroes are not the children of this soil. Consequently their name and their outlook smack of a foreign origin,” he wrote.

The polemicist’s definition of Hindutva would go on to form the basis of Hindu nationalist political ideology. It would be utilised by the RSS group, India's ruling Bharatiya Janata Party (BJP), and other organisations in the Sangh Parivar right-wing Hindu nationalist umbrella grouping.

What is the RSS?

The RSS, which has described itself as the world’s largest volunteer organisation with around six million members, is the main vehicle through which Hindutva ideas are spread.

It was founded in 1925 by physician Keshav Baliram Hedgewar as a movement against British colonial rule and in response to Hindu-Muslim riots. Hedgewar, heavily influenced by the writings of Savarkar, set up the RSS as a group dedicated to protecting Hindu religious, political and cultural interests under the “Hindutva” banner. Though it supported independence, it is not believed to have played any significant role in the fight for freedom from British colonial rule.

Hedgewar was highly critical of the Congress Party, India’s main independence movement, for both its diversity and its political hierarchy. Instead he modelled his RSS members as uniformed, equal volunteers, who practised their discipline with daily exercise and paramilitary training drills.

What are the links between the RSS and fascism?

After Hedgewar’s death in 1940, Madhav Sadashivrao Golwalkar took charge of the RSS group. Golwalkar, one of the ideological architects of the RSS, took inspiration from European fascism, including Nazi Germany.

In his 1939 book We, or, Our Nationhood Defined, Golwalkar said: “To keep up the purity of its race and culture, Germany shocked the world by her purging of the country of the Semitic races - the Jews. Race pride at its highest has been manifested here… a good lesson for us in Hindustan to learn and profit by.” The RSS has since distanced itself from the book.

Earlier this year, a teacher in Uttar Pradesh was suspended for posing the examination question: “Do you find any similarities between Fascism/Nazism and Hindu right-wing (Hindutva)? Elaborate with the argument.”

It’s a subject that was meticulously researched by Italian historian Marzia Casolari, who in her book In The Shadows of the Swastika utilised archives from Italy, India and the UK to outline the relationship between Hindu nationalism and Italian fascism and Nazism.

Hindutva figures have been linked to extremist right-wing European figures in more recent years too: Norwegian mass murderer Anders Breivik hailed the Hindu nationalist movement as a “key ally to bring down democratic regimes across the world”, listing the websites of the RSS and BJP among others.

What role does the RSS play in Indian politics?

In 1948, the RSS was banned by the Indian government after one of its members, Nathuram Godse, assassinated anti-colonial hero Mahatma Gandhi. The RSS said he was a rogue extremist who had left the group by the time of the killing - something Godse’s own family members have since disputed.

A year later, the ban was lifted and an official investigation absolved the RSS of involvement in the assassination. Since then the group has recovered to become one of the biggest forces in Indian society.

It gained particular prominence in the 1980s after calling for a Hindu temple to be built over the Babri mosque - a 16th century Muslim place of worship in Ayodhya - because it believed the Hindu god Ram was born there. The mosque was destroyed by Hindu extremists in 1992, and subsequent riots, allegedly spurred on by RSS "foot soldiers", resulted in the death of around 2,000 people, mostly Muslims.

The group gained popularity following the incident, as did the BJP - often referred to as the political wing of the paramilitary movement - which subsequently won parliamentary elections in 1996 and 1998.

What are its links with prime minister Narendra Modi?

In 2002, extreme Hindutva nationalist groups belonging to the Sangh Parivar coalition were blamed for instigating the Gujarat riots, during which 790 Muslims and 254 Hindus were killed. The then chief minister of Gujarat, Narendra Modi, was accused of failing to stop the violence. He was banned from entering the UK and US on that basis - a boycott which has since been reversed.

Modi, who has been Indian prime minister since 2014, is a longtime RSS member. Following his re-election to office in 2019, a study found that of 53 ministers from the BJP in Modi’s government, 38 had an RSS background (71 percent). That share had risen from 61 percent in his first term.

That influence can be seen in policy areas such as education, where RSS efforts have led to some Indian states teaching Hindu scripture as historical fact.

Does the RSS have influence beyond India?

Yes. While it began as a 17-member cadre in Hedgewar’s living room in Nagpur, it now has more than 80,000 shakhas (branches), thousands of schools across India, and membership among nearly three-quarters of government ministers.

It also has a presence around the world: its overseas units, known as Hindu Swayamsevak Sangh (HSS), are reportedly in 39 countries, including the United States, Australia and the UK.

While the shakhas abroad provide a means for community activities and charity, they have also been seen as a key vehicle - along with the Overseas Friends of BJP (OFBJP) - for mobilising votes for Modi. As well as encouraging friends and family to vote back home, Hindutva adherents abroad have also been known to provide expertise and funding to help the BJP electoral machine.

They are also involved in organising high-profile mass rallies held by Modi during his overseas trips, such as the “Howdy Modi” event in Texas in 2019 which was attended by then-US President Donald Trump and 50,000 others.

In September 2021, Democratic Party officials in New Jersey accused Hindutva organisations of "infiltrating all levels of politics" in the US, including through efforts that successfully blocked a 2013 congressional resolution warning against the Hindu nationalist movement.

The Hindu nationalist movement has sparked controversy in the UK too: in 2019, right-wing anti-Muslim activists targeted British Hindus with text messages urging them not to vote for the UK Labour Party, accusing it of being “anti-India” and “anti-Hindu”.

And in September 2022, after the weeks-long confrontations between Hindu and Muslim communities in Leicester, the Muslim Council of Britain condemned what it described as "the targeting of Muslim communities in Leicester by far-right Hindutva groups". Videos on social media showed men in balaclavas near mosques chanting “Jai Shri Ram” (Glory to Lord Ram), a slogan used by far-right Hindus during anti-Muslim rallies.

The scenes came just days after calls mounted for the UK government to ban a speaking tour by Modi ally and nationalist activist Sadhvi Nisha Rithambara. Organisers called off the tour on Friday, citing her ill health.

Today the RSS is more influential than ever - and Facebook allows its most militant ideologues to spread their ideas further than they otherwise could.

Suresh Chavhanke is an RSS member and managing director of Sudarshan News. Two episodes of his show, Bindas Bol, broadcast on television and online, have been banned by the Supreme Court of India for targeting Muslims. But Chavhanke can spread his message another way - on Facebook. In a video on his official Facebook page, viewed more than 200,000 times before it was taken down, he administered an oath before a crowd: “We pledge, until the day we die, in order to make this country a Hindu Rashtra [nation]… we will fight, we will die and if need be, we will kill.” Chavhanke’s official Facebook page is yet to be taken down.

At the height of the controversy about a Muslim teenager being beaten in Narsinghanand's temple, fans of Sudarshan News Channel streamed a Facebook Live video showing Chavhanke backing Narsinghanand. Chavhanke told the crowd that "with so many Hindus around it is time to determine the war strategy". The event then became increasingly macabre. "I am ready to give my life and take lives," a woman in the crowd shouted. She presented two swords to Chavhanke and Narsinghanand as the crowd roared in approval.

Pushpendra Kulshrestha, a prominent journalist and another RSS media figure, engages in anti-Muslim hate speech and uses his influence to push anti-Muslim conspiracy theories. In one speech, which has four million views on Facebook, he says: "Those Muslims that you consider as just owners of small tyre repair shops have a 1,000 years old agenda in their mind when deciding to set up their shops on national highways." At the time of publication, this speech, his verified page and numerous fan pages were all still accessible on Facebook.

Conspiracy theories like Kulshrestha’s often hinge on the idea that even innocuous activities are part of a Muslim “jihad” against India.

Mohammad Rafat Shamshad, a Supreme Court lawyer who has worked on high-profile cases relating to Muslims and hate speech, told Middle East Eye: “When conspiracy theories against the majority or the nation are created, they are bound to become sensational issues. Platforms like Facebook make them available everywhere in the country, in small villages and big cities. Once you have so many users buying into these false narratives as conspiracy, it becomes a serious problem for the social fabric.”

This is partly, Shamshad explained, because many users of social media are unable to discern whether content is true or false. “Considering the low quality of education available for many people, something a young person sees online can seem to them to be a sacrosanct truth.”

He also believes that Facebook’s amplification of conspiracy theories is no minor issue. “When the passion of youth is aroused through this process, the entire country’s politics get influenced - this shapes people to consolidate vote banks.”

We put Shamshad’s criticisms to Meta among other questions. It did not respond to this specific assertion.

Safeguards ‘inadequate’

So why does Meta, the world’s biggest social media company, give a platform to such rhetoric?

Hate speech, it must be stressed, contravenes Facebook’s rules. Meta defines it as an attack on people based on “protected characteristics”, including race, religious affiliation, and caste. Engaging in hate speech on Facebook is not allowed: “freedom of expression requires security of the body, protection from non-discrimination, and privacy,” says Meta’s general human rights report, published in July 2022.

Part of the problem is the persistent failure to identify murderous rhetoric on the platform. Facebook largely relies on moderating content through artificial intelligence (AI), which has been deemed ineffective by some of its own former employees. The challenges of identifying hate speech across a range of regional Indian languages and dialects make this task even harder.

In May 2021, Meta told the US House Committee on Energy and Commerce that it worked with more than 80 third-party fact-checking organisations globally, covering over 60 languages. Meta told Middle East Eye that content in 20 Indian languages is reviewed on Facebook: "We have a network of 11 fact-checkers in the country who have the ability to fact-check in 15 Indian languages plus English."

Frances Haugen, a former product manager at Facebook turned whistleblower, said in 2021 that the company’s resources are in practice inadequate: hate speech - especially in regional Indian languages - is not picked up by Facebook’s AI and regularly passes under the radar.

Ritumbra Manuvie, a senior researcher at the Security, Technology and E-Privacy group at the University of Groningen, and executive director of The London Story, analysed Facebook’s use of AI for content moderation. She found that the technology is unable to detect thinly veiled content within its context and environment. “Most of what’s taken down is profanity and spam,” she said.

Middle East Eye put this specific allegation to Meta, but received no response.

The problem of moderation also goes deeper than any alleged technical issues. Facebook’s business model makes the platform inherently conducive to hate speech. It works to maximise user engagement, which increases advertising revenue. This means that Facebook’s algorithms favour content that triggers comments, likes and other reactions. Such content can often be controversial, sensationalist and hateful.

Facebook has long known about the problem: an internal presentation in 2016 found that “64 percent of all extremist group joins are due to our recommendation tools”.

In 2018, a Facebook task force found a correlation between maximising user engagement and increasing political polarisation. The task force proposed several actions to remedy political polarisation, including changing recommendation algorithms so that Facebook users are directed towards a more diverse range of groups. But it also acknowledged that this strategy would hurt Facebook’s growth. In the end, most of the proposals weren’t adopted.

Since 2019, Facebook seems to have taken the issue of non-discrimination more seriously, ensuring that its users aren’t being shown particular adverts because of their ethnic background, as well as countering allegations of anti-conservative bias in the United States. But critics say that despite these reforms, Facebook’s algorithms still encourage engagement with hate speech and conspiracy theories.

Middle East Eye asked Meta whether Facebook promoted hate speech. The company pointed to comments made by founder and CEO Mark Zuckerberg on 6 October 2021, when he said that moral, business and product incentives all indicated the opposite. “The argument that we deliberately push content that makes people angry for profit is deeply illogical," Zuckerberg said. "We make money from ads, and advertisers consistently tell us they don't want their ads next to harmful or angry content.”

But Meta has previously been slow to act against Islamophobia on its platforms. In 2021, Time magazine contacted Facebook about a video in which a preacher called for Hindus to kill Muslims. It was then taken down - but by then it had already attracted 1.4m views.

This is in contrast to the experience of Manuvie and her team. They have monitored Facebook in India, and reported more than 600 pages that persistently spread hate: only 16 of these pages were removed.

Meta did not respond to Middle East Eye’s request for a response to these findings.

On another occasion, Time reported, Facebook banned an anti-Muslim hate group with nearly three million followers, but left its pages online for several months. Some of these depicted Muslims as green monsters with grotesquely long fingernails.

Meta told Middle East Eye: “While we know we have more work to do, we are making real progress. We remove 10 times as much hate speech on Facebook as we did in 2017, and the amount of hate speech people actually see on our services is down by over 50 percent in the last two years.”

But leaked internal documents in 2021 showed that Facebook was deleting less than five percent of hate speech posted on its platform.

How is this allowed to happen?

In September 2021, Haugen presented evidence to the US Securities and Exchange Commission that “Facebook knowingly chose to permit political misinformation and violent content/groups” to protect its business interests. In effect, she explained, the company had to adapt to India’s political environment in order to maximise its profits.

Prime Minister Modi has long displayed an interest in Facebook - and vice versa. Zuckerberg hosted him at Facebook’s headquarters in California in September 2015, introducing Modi to his parents and even changing his profile picture to back New Delhi’s Digital India programme to increase internet access nationwide.

During the past few years, the relationship between the BJP and Meta has only strengthened. In 2020, the Wall Street Journal reported the conclusion of Facebook’s safety team that Bajrang Dal, a Hindu nationalist group, supported attacks on minorities. This followed an incident in June that year when a Pentecostal church near New Delhi was stormed and its pastor attacked. Bajrang Dal claimed responsibility in a video that racked up nearly 250,000 views on Facebook.

But, the Wall Street Journal reported, Facebook’s security team opposed banning the group from the platform, warning that employees in India could face violence from Bajrang Dal if it did. As Middle East Eye prepared this article for publication, the group’s page remained up on Facebook. Significantly, the security team also warned that banning the militant group could hurt Facebook’s business by angering politicians in the BJP.

MEE asked Meta why it has not banned Bajrang Dal. It received no response.

In September 2020, following reports in the Wall Street Journal and Time magazine about hate speech on Facebook, India’s minister for information and technology, Ravi Shankar Prasad, wrote to Zuckerberg. Prasad criticised the “collusion of a group of Facebook employees with international media”, describing it as “unacceptable” that the “political biases of individuals impinge on the freedom of speech of millions of people”. The message seemed clear: don’t act against the BJP’s interests.

Less than a month later, Ajit Mohan, the head of Facebook India, was questioned by a parliamentary panel. During the hearing, opposition politicians criticised Facebook for failing to remove inflammatory content posted by accounts connected to the BJP.

Since then, further evidence has emerged of the close relationship between Facebook and the BJP. In March 2022, for instance, a joint analysis by The Reporters Collective and ad.watch of 536,070 political advertisements in India concluded that between February 2019 and November 2020, Facebook charged the BJP less for election adverts than other political parties. On average, Congress, India’s largest opposition party, paid nearly 29 percent more to show an advert a million times than the BJP did.

The report also stated that Facebook allowed surrogate advertisers to promote the BJP in elections - while blocking nearly all surrogate advertisers for the rival Congress Party.

Middle East Eye asked Meta if it was playing a partisan role in Indian politics. It replied: “We apply our policies uniformly without regard to anyone’s political positions or party affiliations. The decisions around integrity work or content escalations cannot and are not made unilaterally by just one person; rather, they are inclusive of different views from around the company, a process that is critical to making sure we consider, understand and account for both local and global contexts.”

Hindutva and conspiracy theories

An investigation by The Intercept last year reported that Facebook had banned only one Hindutva group globally, in contrast to hundreds of Muslim groups. Pro-RSS Facebook pages regularly promote the growing conspiracy theory of the Love Jihad, which claims that Muslim men are seducing Hindu women as part of an Islamic plot.

In 2021, Time reported an internal Facebook presentation which acknowledged that the RSS shares “fear-mongering, anti-Muslim narratives” targeting “pro-Hindu populations with V&I [violence and incitement] content”. Such behaviour contravenes Facebook’s rules - but the RSS has not been banned from Facebook.

According to Time, “Facebook is aware of the danger and prevalence of the Love Jihad conspiracy theory on its platform but has done little to act on it, according to internal Facebook documents seen by Time, as well as interviews with former employees. The documents suggest that 'political sensitivities' are part of the reason that the company has chosen not to ban Hindu nationalist groups who are close to India’s ruling Bharatiya Janata Party (BJP)."

In a statement to Middle East Eye, Meta disputed that it enforces its rules selectively, saying: “We ban individuals or entities after following a careful, rigorous, and multi-disciplinary process. We enforce our Dangerous Organizations and Individuals policy globally without regard to political position or party affiliation.”

But this statement contradicts a Wall Street Journal report in September 2021, which alleged the company exempts millions of its high-profile users from its usual rules through a programme known as “XCheck”. Many of these users, including political figures and media personalities, “abuse the privilege, posting material including harassment and incitement to violence that would typically lead to sanctions,” the WSJ reported.

The WSJ also revealed that Ankhi Das, Facebook’s public policy director for South and Central Asia, opposed banning politician T Raja Singh from the platform, even though he had called Muslims traitors and threatened to raze mosques. Das told staff that applying hate speech rules to politicians close to the BJP would “damage the company’s business prospects in the country”. Following an outcry, Facebook banned Singh and Das resigned.

In 2019 Facebook commissioned a human rights impact assessment (HRIA) of India. It was conducted by Foley Hoag LLP, a US-based law firm. In January this year, more than 20 human rights groups called on Meta to release the full report. It has still not been published.

In July this year, Meta released its first general human rights report, which did include a short summary of the HRIA. It told Human Rights Watch that it does not “have plans to publish anything further on the India HRIA”.

Human Rights Watch responded in a statement that the summary “gets us no closer to understanding Meta’s responsibility, and therefore its commitment to addressing the spread of harmful content in India”.

Manuvie of The London Story called the summary a “cover-up of its [Meta’s] acute fault-lines in India”, which showed that Meta’s “commitment to human rights is rather limited”. Pro-democracy campaigner Alaphia Zoyab was likewise unimpressed. “I’ve never read so much bull***t in four short pages,” she said.

In a statement to MEE, a Meta spokesperson said: “We balance the need to publish these reports while considering legitimate security concerns. While we don’t agree with every finding, we do believe these reports guide Meta to identify and address the most salient platform-related issues.”

We studied Meta’s general human rights report carefully. It said that the Human Rights Impact Assessment found that Meta had made “substantial investments in new resources to detect and mitigate hateful and discriminatory speech”.

But the HRIA, the general report stated, noted that “human risks” could be created by Facebook users in India, and that “Meta faced criticism and potential reputational risks” as a result. Strikingly, the report noted that the HRIA “did not assess” any “allegations of bias in content moderation”. It said that “in 2020-2022 Meta significantly increased its India-related content moderation workforce and language support” so that it now has reviewers “across 21 Indian languages” (the constitution recognises 22, although many more are spoken).

All this suggests that more hate speech should be identified on Facebook – but it says nothing about the failure to remove hate speech once it has been identified, or about users being exempted from the rules.

Meta’s human rights report also says that the company will “expand participation in our Resiliency Initiative, which empowers local communities with digital tools to combat hate, violence, and conflict”.

We looked at the Resiliency Initiative: it appears aimed at users who might engage in hate speech unwittingly. “Are you relying on common stereotypes or inaccurate assumptions?” its Step-by-Step Guide to Social Media asks, warning that this “could reinforce rather than break down barriers”.


Users are also encouraged to ask themselves: “How will your audience respond to this message?” and “If you’re unsure, test it out!” This guidance is, of course, irrelevant for anyone determined to spread hatred towards minorities. Indeed, the influence of the likes of Narsinghanand depends upon defying the policy.

The general human rights report also promises to expand Meta’s “search redirect program”, which it says is a “countering violent extremism” initiative that directs hate and violence-related search terms towards “resources, education, and outreach groups that can help”.

But the problem is that in Modi’s India, extreme hate speech has become part of the normal political discourse. None of Meta’s promises come close to stemming the increasing spread of misinformation and hate speech.

Haugen believes that, notwithstanding its claims to be making improvements, there are still serious problems with the company’s policies. Speaking at a webinar hosted by several American human rights and civil society groups about the general human rights report, she said: “Facebook’s report points [out that] they have an oversight board that people can appeal to, that they’re transparent about what they take down… But the reality is that they won’t give us even very basic data on what content-moderation systems exist in which languages, and (on) the performance of those systems.”

In a statement to Middle East Eye, a Meta spokesperson said: “We invest billions of dollars each year in people and technology to keep our platforms safe. We have quadrupled - to more than 40,000 - the number of people working on safety and security, and we’re a pioneer in artificial intelligence technology to remove hateful content at scale.”

A threat to democracy

Meta is now under more scrutiny in India than ever before. The Delhi Legislative Assembly condemned the company after the 2020 Delhi killings. When the assembly summoned Ajit Mohan, head of Facebook India, he refused to appear and instead petitioned the Supreme Court to challenge the summons.

The court’s judgment in July 2021, more than a year after the riots, rebuked Facebook. India has “a history of what has now commonly been called ‘unity in diversity’,” it observed. “This cannot be disrupted at any cost or under any professed freedom by a giant like Facebook claiming ignorance or lack of any pivotal role.”

The court was also damning about Facebook’s influence in India. “Election and voting processes, the very foundation of a democratic government, stand threatened by social media manipulation,” it said.

When a Delhi Legislative Assembly committee finally grilled Shivnath Thukral, Facebook’s public policy director, in November 2021, he refused to say whether Facebook had taken prompt action on hateful content during the 2020 violence, sticking to the line that the question pertained to law and order. “By stonewalling my questions, or by reserving your right to reply, you are frustrating the objective of this committee,” the committee chair told him.

MEE put the statements made by both the Supreme Court and the Delhi Legislative Assembly committee chair to Meta, but received no reply.

Indian law forbids “words, either spoken or written” that cause “feelings of enmity, hatred or ill-will” between religious (and other) communities. Many legal experts therefore argue that the problem of anti-Muslim hate speech is not a lack of laws but a failure to enforce the ones that already exist. This suggests that, as matters stand, Meta will only change if the social media giant decides to do so itself.

We asked Fuzail Ahmad Ayyubi, a Supreme Court lawyer who has worked on cases relating to hate speech, what should be done.

“My demand is not that Facebook should be subject to more laws,” he said. “Internally [within Meta] there should be a mechanism to take care of the issue. There have to be more precautions to stop the misuse of the medium. Our problem is where Facebook’s intention is concerned.”

Mohammad Rafat Shamshad, also a Supreme Court lawyer, believes that “if the existing law is applied equally then probably things will improve… the discriminatory application of the law is a big problem.” Like Ayyubi, Shamshad argues that Facebook’s problem is its intention, not its competence. “Facebook can create a mechanism,” he said, “through which they can identify groups that are propagating hatred and put a scanner on them. If a group or individual is involved in creating hate speech, why can’t you ban them?”

But doing so, he suggests, is not high on Facebook’s list of priorities. “If the revenue generated is so high, it might not be important for Facebook to stop the hate speech.”

In other words, the risk for Facebook is the potential damage to its business if it makes serious attempts to remove hate speech.

This is all complicated by the fact that hate speech is now part of everyday conversation in Indian politics. Consider the RSS: despite its fascist-inspired origins, it has become as mainstream in India as it is possible to be. It promotes anti-Muslim hate speech and misinformation but also educates millions of young Indians in its schools; has its own trade unions; and has opened a training school for people who want to join the army. The ruling BJP is effectively its political wing. How can Facebook ban it as a “dangerous organisation” and still expect to maximise its profits in India?

We put the criticisms by Ayyubi and Shamshad to Meta. It did not respond directly to these.

We asked Shamshad for his opinion on what the stakes are for Indian Muslims: he is, after all, a prominent Indian Muslim lawyer confronted with the consequences of escalating Islamophobic politics.

“There is a possibility,” he said, “that in sporadic riots, a large number of people may be killed in various pockets of the country. That risk is constantly there.”

Could there be a genocide?

“Country-wide genocide is not an imminent risk right now. But these [social media] platforms are contributing to weakening democratic norms. They are increasing hatred between communities. If this continues, things will get worse.”

If hatred and violence towards Muslims in India escalate during the coming months and years, then Facebook and Meta may be blamed for enabling a climate of hatred in a country with a well-documented heritage of horrific communal violence.

Mark Zuckerberg might care to reflect on that.

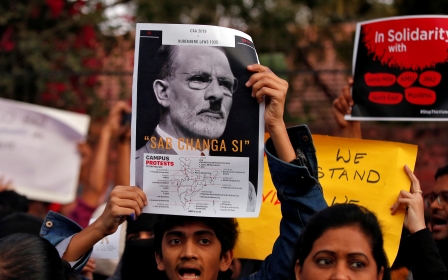

Main photo: A protester marches against violence in New Delhi in February 2020, in a month when Muslim-majority neighbourhoods were stormed by a mob (Reuters).

Middle East Eye delivers independent and unrivalled coverage and analysis of the Middle East, North Africa and beyond. To learn more about republishing this content and the associated fees, please fill out this form. To find out more about MEE, click here.